
IBM has given parts of the AIX LVM to Linux, so it stands to reason that there is a set of AIX-like commands for Linux to use. This post shows the basic steps to create a filesystem. A more comprehensive introduction to LVM is at:

I created four new partitions and made them available to Linux for a test:

cd /dev

ls -l

brw-r----- 1 root disk 8, 32 Nov 3 10:52 sdc

brw-r----- 1 root disk 8, 48 Nov 3 10:52 sdd

brw-r----- 1 root disk 8, 64 Nov 3 10:52 sde

brw-r----- 1 root disk 8, 80 Nov 3 10:52 sdf

And the LVM commands that I find are:

lvm lvmchange lvmdiskscan lvmsadc lvmsar

pvchange pvcreate pvdisplay pvmove pvremove pvresize pvs pvscan

vgcfgbackup vgcfgrestore vgchange vgck vgconvert vgcreate vgdisplay vgexport vgextend vgimport vgmerge vgmknodes vgreduce vgremove vgrename vgs vgscan vgsplit

I start with pvcreate:

pvcreate /dev/sdc /dev/sdd /dev/sde /dev/sdf

Physical volume "/dev/sdc" successfully created

Physical volume "/dev/sdd" successfully created

Physical volume "/dev/sde" successfully created

Physical volume "/dev/sdf" successfully created

Next I display what I have done:

pvdisplay

--- Physical volume ---

PV Name /dev/sdb1

VG Name system

PV Size 15.00 GB / not usable 0

Allocatable yes

PE Size (KByte) 4096

Total PE 3839

Free PE 24

Allocated PE 3815

PV UUID Vydyz4-Njvf-6Wyk-Xl2s-UQM1-VcG4-Gbdk9a

--- NEW Physical volume ---

PV Name /dev/sdc

VG Name

PV Size 3.75 GB

Allocatable NO

PE Size (KByte) 0

Total PE 0

Free PE 0

Allocated PE 0

PV UUID dYMnY2-V4By-N21v-s4Bh-nfMz-TKOp-xrmT2s

I already had one volume created (sdb1), and it shows up a little differently. For one thing, the new ones all say ‘NEW’. They also show Allocatable NO with a PE size of 0, because they don't belong to a volume group yet. So first they need a volume group:

vgcreate testvg /dev/sdc /dev/sdd /dev/sde /dev/sdf

Volume group "testvg" successfully created
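You may want to check which physical volumes are still outside any volume group (the ones pvdisplay marks as NEW). A shorter report than pvdisplay helps here. This is a sketch that assumes the `pvs --noheadings -o pv_name,vg_name` report format: one PV per line, with an empty VG column for unassigned PVs. The function name `unassigned_pvs` is only illustrative.

```shell
# unassigned_pvs: read "pv_name vg_name" rows on stdin and print the PVs
# whose VG column is empty, i.e. PVs that are not yet in a volume group.
# Intended input: pvs --noheadings -o pv_name,vg_name
unassigned_pvs() {
    awk 'NF == 1 { print $1 }'
}
```

On a live system this would be run as `pvs --noheadings -o pv_name,vg_name | unassigned_pvs`. Before the vgcreate above, it should have listed /dev/sdc through /dev/sdf.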

And vgdisplay shows the volume groups (the entry below is for the existing system group):

vgdisplay

--- Volume group ---

VG Name system

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 2

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 1

Open LV 1

Max PV 0

Cur PV 1

Act PV 1

VG Size 15.00 GB

PE Size 4.00 MB

Total PE 3839

Alloc PE / Size 3815 / 14.90 GB

Free PE / Size 24 / 96.00 MB

VG UUID F8PeFV-TIJj-YHqB-SpYv-Qxgn-UuwH-yE8eQH

Just like in AIX, the volume group can now be split into logical volumes:

lvcreate --name testlv1 --size 2G testvg

Logical volume "testlv1" created

lvcreate --name testlv2 --size 2G testvg

Logical volume "testlv2" created

lvdisplay

--- Logical volume ---

LV Name /dev/testvg/testlv1

VG Name testvg

LV UUID ixjAYJ-A8Uz-CUga-OCmo-HBvv-OaC0-wkR0pN

LV Write Access read/write

LV Status available

# open 0

LV Size 2.00 GB

Current LE 512

Segments 1

Allocation inherit

Read ahead sectors 0

Block device 253:1
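The extent count is simple arithmetic. With the 4 MB extent size shown by vgdisplay, a 2 GB logical volume needs 2048 / 4 = 512 extents, which matches "Current LE 512". Below is a small helper (the name `extents_for` is hypothetical) that does the same calculation. It rounds up to a whole extent, as lvcreate does when a requested size is not an exact multiple of the extent size.

```shell
# extents_for SIZE_MB PE_MB: number of physical extents needed for a
# logical volume of SIZE_MB megabytes, rounded up to a whole extent.
extents_for() {
    echo $(( ($1 + $2 - 1) / $2 ))
}
```

For example, `extents_for 2048 4` gives 512 for testlv1. `extents_for 2560 4` gives 640, which is what testlv2 occupies after being extended to 2.5G.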

The --maps flag shows which physical extents back each logical extent, so it is probably closest to AIX's lslv -m:

lvdisplay --maps

--- Logical volume ---

LV Name /dev/testvg/testlv1

VG Name testvg

LV UUID ixjAYJ-A8Uz-CUga-OCmo-HBvv-OaC0-wkR0pN

LV Write Access read/write

LV Status available

# open 0

LV Size 2.00 GB

Current LE 512

Segments 1

Allocation inherit

Read ahead sectors 0

Block device 253:1

--- Segments ---

Logical extent 0 to 511:

Type linear

Physical volume /dev/sdc

Physical extents 0 to 511

Even though we can refer to the volume group by name (testvg), logical volumes seem to be referred to by their device path. To extend and reduce the size of a logical volume, we say:

lvextend -L 2.5G /dev/testvg/testlv2

Extending logical volume testlv2 to 2.50 GB

Logical volume testlv2 successfully resized

lvreduce -L 2G /dev/testvg/testlv2

WARNING: Reducing active logical volume to 2.00 GB

THIS MAY DESTROY YOUR DATA (filesystem etc.)

Do you really want to reduce testlv2? [y/n]: y

Reducing logical volume testlv2 to 2.00 GB

Logical volume testlv2 successfully resized
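The lvreduce warning is worth taking seriously. testlv2 has no filesystem yet, but if it held an ext3 filesystem, you would have to shrink the filesystem before the logical volume. Below is a minimal sketch of that order. It assumes the filesystem is already unmounted and that resize2fs and lvreduce both read the G suffix as 1024^3 bytes. The function `shrink_plan` is hypothetical, and it only prints the commands so you can review them before running anything.

```shell
# shrink_plan DEVICE SIZE: print (not run) the commands to shrink an
# unmounted ext3 filesystem and then the logical volume underneath it.
# The filesystem must be made smaller before the LV, never after.
shrink_plan() {
    echo "e2fsck -f $1"          # resize2fs asks for a forced check before shrinking
    echo "resize2fs $1 $2"       # shrink the filesystem first
    echo "lvreduce -L $2 $1"     # then shrink the LV to the same size
}
```

Usage: `shrink_plan /dev/testvg/testlv2 2G`.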

Next, let's build a filesystem:

mkfs.ext3 /dev/testvg/testlv1

mke2fs 1.38 (30-Jun-2005)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

262144 inodes, 524288 blocks

26214 blocks (5.00%) reserved for the super user

First data block=0

16 block groups

32768 blocks per group, 32768 fragments per group

16384 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912

Writing inode tables: done

Creating journal (16384 blocks): done

Writing superblocks and filesystem accounting information: done

This filesystem will be automatically checked every 28 mounts or

180 days, whichever comes first. Use tune2fs -c or -i to override.

Finally, all we have to do is mount the filesystem and add a line to /etc/fstab for it:

mkdir /test

mount /dev/testvg/testlv1 /test

df -h

Then add this line to /etc/fstab:

/dev/testvg/testlv1 /test ext3 rw,noatime 0 0
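If you script this step, make the fstab edit idempotent so that rerunning the script doesn't add a duplicate line. The sketch below uses a hypothetical function, `add_fstab_entry`. It only compares the mount-point field (the second column) and ignores comment lines.

```shell
# add_fstab_entry FILE "DEVICE MOUNTPOINT TYPE OPTIONS DUMP PASS"
# Appends the entry unless a non-comment line already uses that mount point.
add_fstab_entry() {
    fstab_file=$1
    entry=$2
    mount_point=$(echo "$entry" | awk '{ print $2 }')
    if awk -v mp="$mount_point" '$1 !~ /^#/ && $2 == mp { found = 1 }
                                 END { exit (found ? 0 : 1) }' "$fstab_file"; then
        echo "$mount_point already in $fstab_file"
    else
        echo "$entry" >> "$fstab_file"
    fi
}
```

Usage: `add_fstab_entry /etc/fstab "/dev/testvg/testlv1 /test ext3 rw,noatime 0 0"`.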

Sometimes a DLPAR operation from the HMC fails because the HMC can't communicate with the LPAR. That usually happens because some daemons aren't running on the client.

I have run this on a VIO server. I don't know whether it will affect a client that is already using RSCT for something else (HACMP).

To reset the daemons:

/usr/sbin/rsct/install/bin/recfgct

/usr/sbin/rsct/bin/rmcctrl -p

/usr/sbin/rsct/bin/rmcctrl -z

/usr/sbin/rsct/bin/rmcctrl -A
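Here is the same sequence as a small script. It assumes the RSCT paths above match your system. DRY_RUN=1 is a hypothetical convenience variable, not an RSCT feature. It makes the script print the commands instead of running them, which is handy on an HACMP node where you want to review before touching RSCT.

```shell
# Reset the RSCT/RMC subsystem in the same order as the commands above.
RSCT_DIR=/usr/sbin/rsct

run() {
    # In dry-run mode just show the command; otherwise execute it.
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

reset_rmc() {
    run "$RSCT_DIR/install/bin/recfgct" &&   # reinitialize the RSCT configuration
    run "$RSCT_DIR/bin/rmcctrl" -p &&        # enable remote client connections
    run "$RSCT_DIR/bin/rmcctrl" -z &&        # stop the RMC subsystem
    run "$RSCT_DIR/bin/rmcctrl" -A           # add RMC to the SRC and start it
}
```

Run `DRY_RUN=1 reset_rmc` to preview, then `reset_rmc` to do it. The steps are chained with && so the sequence stops at the first failing command.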

dlmpr – utility for clearing Hitachi SAN HDLM persistent reservation

Description

When multiple hosts share a volume group without forming an HACMP cluster configuration, the persistent reservation of a logical unit (LU) may, for some reason, not be canceled. In this case, this utility clears the Reservation Key to cancel the persistent reservation.

# /usr/DynamicLinkManager/bin/dlmpr {{-k | -c} [hdiskn] | -h}

where:

-k: display the Reservation Key. The utility displays an asterisk (*) for a Reservation Key of another host. If the Reservation Key is not set, [0x0000000000000000] is displayed.

Regist Key: the registered Keys are displayed.

Key Count: the number of registered Keys is displayed.

-c: clear the Reservation Key.

hdiskn: the physical volume (hdiskn) for which you want to display the Reservation Key. You can specify more than one volume. If you do not specify this parameter, the utility displays the Reservation Keys for all the physical volumes.

-a: when multiple physical volumes (hdiskn) are specified, processing continues for all of them even if an error occurs.

-h: display the format of the utility for clearing HDLM persistent reservation.

Note: [0x????????????????] appears for the Reservation Key if the destination storage subsystem does not support persistent reservation or if a hardware error occurs.

Example: display the Reservation Keys for hdisk1, hdisk2, hdisk3, hdisk4, hdisk5, and hdisk6:

# /usr/DynamicLinkManager/bin/dlmpr -k hdisk1 hdisk2 hdisk3 hdisk4 hdisk5 hdisk6

self Reservation Key : [0xaaaaaaaaaaaaaaaa]

Example: clear the Reservation Keys for other hosts (marked by an asterisk (*)):

# /usr/DynamicLinkManager/bin/dlmpr -c hdisk2 hdisk3

Example

dlmpr -k hdisk1; dlmpr -c hdisk2
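Reading a key value from the -k output comes down to the three cases described above:

- All zeros means no key is set.
- Question marks mean the storage doesn't support persistent reservation, or a hardware error occurred.
- Anything else is a registered key.

Below is a small helper that classifies a bracketed key value. The function name and the labels it prints are my own, not part of HDLM. It does not handle the asterisk that marks another host's key.

```shell
# classify_key "[0x....]": interpret a Reservation Key value as printed by dlmpr -k
classify_key() {
    case "$1" in
        "[0x0000000000000000]") echo "not set" ;;
        *"?"*)                  echo "unsupported or hardware error" ;;
        "[0x"*"]")              echo "set" ;;
        *)                      echo "unrecognized" ;;
    esac
}
```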

#!/bin/ksh

# Written by John Rigler

# 10/04/2006

# Figure out what parameter was given

case "$1"

in

fscsi?*) CMD="fscsi" ;;

fcs?*) CMD="fcs" ;;

*) CMD="usage";;

esac

# Get fcs if you were given fscsi

if [[ $CMD = fscsi ]]

then

lsdev -Cl $1 | cut -c 18-22 | read FCSNO

lsdev -Cc adapter | grep $FCSNO | read FCS TRASH

fi

if [[ $CMD = fcs ]]

then

FCS=$1

fi

# Run it

if [[ $CMD != usage ]]

then

lscfg -vl $FCS | grep Network | read LINE

for SNIPPET in 29-30 31-32 33-34 35-36 37-38 39-40 41-42 43-44

do

echo $LINE | cut -c $SNIPPET

done | xargs -n8 | sed 's/\ /:/g'

else

echo "Usage: $0 fcs# or fscsi# <---- will return WWPN"

fi
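The cut/xargs/sed pipeline in the script depends on the Network Address value starting at a fixed column of the lscfg output. Below is a sketch of a less position-dependent way to do the same formatting. The function name `format_wwpn` is hypothetical. It assumes the line looks like `Network Address.............10000000C9A1B2C3`, with the 16 hex digits after the last dot.

```shell
# format_wwpn LINE: take an lscfg "Network Address....XXXXXXXXXXXXXXXX" line
# and print the WWPN as colon-separated byte pairs.
format_wwpn() {
    echo "$1" | sed -e 's/[[:space:]]*$//' -e 's/.*\.//' -e 's/../&:/g' -e 's/:$//'
}
```

On AIX this would be used as `format_wwpn "$(lscfg -vl fcs0 | grep 'Network Address')"`.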

538 The configuration manager is going to invoke a configuration method.

Tonight we had to reboot one of our servers after an old version of PowerPath freaked out while discovering LUNs. The LUNs were discovered again on boot, and we sat on LED 538 for about 15 minutes. When you are used to the whole LPAR coming up in less than 10 minutes, this can be scary. But just as we were about to make other plans, the LED moved on and cfgmgr finished.

When we tried to bring the same LUNs online in normal mode, the server would hang on 538 forever. I suspect this is because we are at 5200-08 and PowerPath 3.0.4.0, which is really old stuff.

Of course, something similar happens when installing upgrades: it seems to hang forever.

If the network config is wrong, the boot will get past the configuration manager and hang on NFS or something like that. In this case, I usually boot with an alternate profile that doesn't have any network adapters. Then, from the console, I just rmdev everything and reboot with my old profile. Works every time.

We saw this again later and it took more like 40 minutes but then came up.

So we all know how to write a boot image to a disk (bosboot -ad /dev/hdisk0) and how to set the bootlist (bootlist -m normal hdisk0 hdisk1).

You also want to check in the /dev directory to make sure you have your links set up correctly:

# ls -l | grep -i ipl

crw-rw---- 1 root system 10, 0 Jul 14 2006 IPL_rootvg

crw-rw---- 2 root system 10, 1 Jul 14 2006 ipl_blv (should be same as rhd5)

crw------- 2 root system 20, 0 Aug 13 11:10 ipldevice (should be same as rhdisk1, in this case)

# ls -l | grep hdisk1

brw------- 1 root system 20, 0 Aug 13 11:20 hdisk1

crw------- 2 root system 20, 0 Aug 13 11:10 rhdisk1

# ls -l | grep hd5

brw-rw---- 1 root system 10, 1 Aug 13 11:37 hd5

crw-rw---- 2 root system 10, 1 Jul 14 2006 rhd5

If you see something wrong (for example, ipldevice points to the wrong disk), just rm the ipldevice pseudo-file and relink it (ln rhdisk1 ipldevice).
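"Same as" here means the same major and minor device numbers: the two numbers before the date in the ls -l output. Below is a sketch of that check. It assumes AIX-style ls -l lines like the ones above, where the major number carries a trailing comma. `devno` and `same_devno` are hypothetical helper names.

```shell
# devno LS_LINE: print "major,minor" from one line of ls -l output for a
# device file, e.g. "crw------- 2 root system 20, 0 Aug 13 11:10 ipldevice".
devno() {
    echo "$1" | awk '{ sub(/,$/, "", $5); print $5 "," $6 }'
}

# same_devno LINE1 LINE2: succeed if both device files share major/minor.
same_devno() {
    [ "$(devno "$1")" = "$(devno "$2")" ]
}
```

From /dev on AIX, this would be used as `same_devno "$(ls -l ipldevice)" "$(ls -l rhdisk1)" && echo "ipldevice OK"`.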

Also, support has me run this command, which lists all bootable disks even if they aren't part of rootvg (you see that with VIO disks that are in rootvg for a client):

# ipl_varyon -i

PVNAME BOOT DEVICE PVID VOLUME GROUP ID

hdisk0 NO 00031691bced4a4e0000000000000000 00c1b3da00004c00

hdisk2 NO 00cdeaeadfcd0ebc0000000000000000 00c1b3da00004c00

hdisk1 YES 00031691bcd549a60000000000000000 00cdeaea00004c00
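To pull out just the disks that ipl_varyon -i reports as bootable, a one-line filter on the BOOT DEVICE column is enough. This assumes the column layout shown above; `boot_disks` is a hypothetical name.

```shell
# boot_disks: read ipl_varyon -i output on stdin and print the disks whose
# BOOT DEVICE column says YES (the header line never matches).
boot_disks() {
    awk '$2 == "YES" { print $1 }'
}
```

On AIX: `ipl_varyon -i | boot_disks`.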

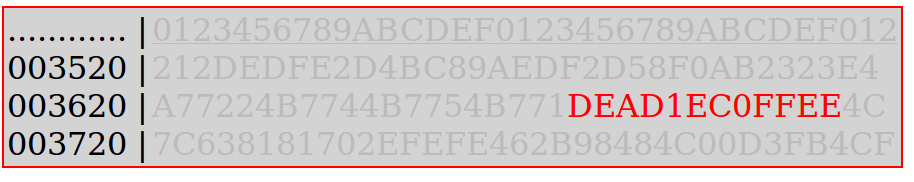

lquerypv simply reads the data from the disk and displays it in a format similar to od (octal dump). In the example below, we see the PVID written to the disk at offset 0x80. You seem to be able to read anything you point lquerypv at (I tried /etc/motd and read it just fine). This is great for reading the PVID of a logical volume on a VIO server that is presented as a virtual disk to a client, since you can't see that information with lspv.

lquerypv is also a great command for figuring out where disk access issues are. If lquerypv returns any data, then you can read the disk and it isn't a reserve issue. If it can't read any data, and just hangs or returns nothing, then ABSOLUTELY NO OTHER AIX COMMAND WILL WORK. At this point you should stop looking at your filesystems, volume groups, and logical volumes. The issue is that you simply can't read the disks. You need to either go to the VIO server and see if there is a problem there, or use lsattr -El hdisk0 to check the SCSI reserve (on another system that might be sharing the disk).

If the issue is on your VIO server, or you have direct-attached SAN disks, then ask your SAN administrators to check their stuff. If, however, queries against all of your disks hang, especially during an initial install, then maybe your client SAN software is messed up. You could try removing it and using the MPIO version, or just reinstall it. The clearest sign of a single disk with a reserve lock at the SAN level is when lquerypv returns nothing for that disk while lquerypv against other disks works fine.

# lspv

hdisk0 00031691bced4a4e oraclevg active

hdisk2 00cdeaeadfcd0ebc oraclevg active

hdisk1 00031691bcd549a6 rootvg active

# lquerypv -h /dev/hdisk0

00000000 C9C2D4C1 00000000 00000000 00000000 |................|

00000010 00000000 00000000 00000000 00000000 |................|

00000020 00000000 00000000 00000000 00000000 |................|

00000030 00000000 00000000 00000000 00000000 |................|

00000040 00000000 00000000 00000000 00000000 |................|

00000050 00000000 00000000 00000000 00000000 |................|

00000060 00000000 00000000 00000000 00000000 |................|

00000070 00000000 00000000 00000000 00000000 |................|

00000080 00031691 BCED4A4E 00000000 00000000 |......JN........|

00000090 00000000 00000000 00000000 00000000 |................|

000000A0 00000000 00000000 00000000 00000000 |................|

000000B0 00000000 00000000 00000000 00000000 |................|

000000C0 00000000 00000000 00000000 00000000 |................|

000000D0 00000000 00000000 00000000 00000000 |................|

000000E0 00000000 00000000 00000000 00000000 |................|

000000F0 00000000 00000000 00000000 00000000 |................|

#
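The PVID sits in the first two words of the 0x80 row, so you can pull it out of the lquerypv -h output and compare it with lspv. Below is a sketch that assumes the dump layout shown above. `pvid_from_dump` is a hypothetical name. It lowercases the hex because lspv prints PVIDs in lower case.

```shell
# pvid_from_dump: read lquerypv -h output on stdin and print the PVID,
# i.e. the first 8 bytes of the row at offset 0x80, in lspv's lower-case form.
pvid_from_dump() {
    awk '$1 == "00000080" { print tolower($2 $3) }'
}
```

On AIX: `lquerypv -h /dev/hdisk0 | pvid_from_dump`, then compare the result with `lspv`.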

lqueryvg bypasses LVM altogether and reads the VGDA directly from any disk that is a member of a volume group. When the LVM information in the ODM and the VGDA get out of sync with each other, the volume group information here can be a great help. Think of this information as what gets read from the disk when you do an importvg.

# lqueryvg -Atp hdisk0

Max LVs: 256

PP Size: 28

Free PPs: 959

LV count: 4

PV count: 2

Total VGDAs: 3

Conc Allowed: 0

MAX PPs per PV 1016

MAX PVs: 32

Quorum (disk): 0

Quorum (dd): 0

Auto Varyon ?: 0

Conc Autovaryo 0

Varied on Conc 0

Logical: 00c1b3da00004c0000000112982a7298.1 loglv02 1

00c1b3da00004c0000000112982a7298.3 fslv00 3

00c1b3da00004c0000000112982a7298.4 fslv02 3

00c1b3da00004c0000000112982a7298.5 fslv04 3

Physical: 00cdeaeadfcd0ebc 2 0

00031691bced4a4e 1 0

Total PPs: 1617

LTG size: 128

HOT SPARE: 0

AUTO SYNC: 0

VG PERMISSION: 0

SNAPSHOT VG: 0

IS_PRIMARY VG: 0

PSNFSTPP: 4352

VARYON MODE: 0

VG Type: 0

Max PPs: 32512

#
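The PP Size field appears to be a power of two rather than a size in megabytes: 28 would mean 2^28 bytes, or 256 MB partitions. That log2 reading of PP Size is the assumption behind the sketch below, which turns the lqueryvg numbers into sizes. The function names are hypothetical.

```shell
# pp_mb LOG2: convert lqueryvg's "PP Size" exponent to megabytes.
pp_mb() {
    echo $(( (1 << $1) / 1048576 ))
}

# vg_mb LOG2 PP_COUNT: megabytes covered by PP_COUNT partitions of that size.
vg_mb() {
    echo $(( $(pp_mb "$1") * $2 ))
}
```

With the values above:

- `pp_mb 28` gives 256.
- `vg_mb 28 1617` gives the total: 413952 MB, about 404 GB.
- `vg_mb 28 959` gives the free space: 245504 MB.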

In AIX, you can create devices by simply running ‘cfgmgr’. It walks the bus (or virtual bus) and defines any new adapters, disks, tapes, or whatever it finds. Sometimes it is really advantageous to have the device numbering skip a little, to stay in sync with another system that sees the same disks. ‘dummydisk’ is a small self-referencing script that creates bogus ODM entries that look however you want them to. Then you simply run ‘cfgmgr’, and the device names you want to skip will already be taken. So far I don't have a version number or an installp package. The dummy devices can only be removed with the script's -d option (not with rmdev), but that is what version 0.1 is all about:

#!/bin/ksh

###############################################################

# Title : dummydisk - Creates a placeholder disk

# Author : John Rigler

# Date : 7/31/2008

# Requires : ksh

# Web : http://deadlycoffee.com/?p=12

###############################################################

# This script creates phantom disks that don't actually do

# anything but makes it easier to line up disk names on

# different servers. Just create dummy disks up to the actual

# disk that you need, then when you run cfgmgr, your new disk

# or disks will be created with the correct id. It is possible

# to create your disks with mkdev, but who the heck does that?

# You can't yet delete these disks with rmdev because the config

# method is wrong. Once I get some time, I will add entries to

# the Pd tables to make rmdev -dl work.

################################################################

case $1 in

-a ) grep "^##S" $0 | cut -c 4- | sed "s/REPLACENAME/$2/g" | odmadd ;;

-d ) odmdelete -o CuDv -q "name = $2" ;

odmdelete -o CuAt -q "name = $2" ;

odmdelete -o CuDvDr -q "value3 = $2" ;;

* ) echo;

echo "To add a dummy device: $0 -a devicename";

echo "To delete a dummy device: $0 -d devicename" ;

echo ;;

esac

##SCuDv:

##S name = "REPLACENAME"

##S status = 1

##S chgstatus = 2

##S ddins = "dummydev"

##S location = "FF-FF-FF-FF,F"

##S parent = "scsi2"

##S connwhere = "FF,F"

##S PdDvLn = "disk/scsi/scsd"

##S

##SCuAt:

##S name = "REPLACENAME"

##S attribute = "unique_id"

##S value = "deadlyc0ffee000000000000"

##S type = "R"

##S generic = ""

##S rep = "n"

##S nls_index = 0

##S

##SCuAt:

##S name = "REPLACENAME"

##S attribute = "pvid"

##S value = "000dummydisk0000 "

##S type = "R"

##S generic = "D"

##S rep = "s"

##S nls_index = 11

##S

##SCuDvDr:

##S resource = "devno"

##S value1 = "99"

##S value2 = "0"

##S value3 = "REPLACENAME"
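What makes dummydisk self-contained is that the ODM stanzas live in the script itself, prefixed with ##S. The -a option just extracts and renames them. Without the odmadd call, the extraction step can be tried on any system to preview what would be loaded. Below is a sketch of that step as a function. `expand_template` is a hypothetical name, and the pipeline is the same one the -a branch uses.

```shell
# expand_template FILE NAME: print every "##S" line of FILE with the prefix
# removed and REPLACENAME substituted, i.e. the stanza text that dummydisk -a
# pipes into odmadd.
expand_template() {
    grep '^##S' "$1" | cut -c 4- | sed "s/REPLACENAME/$2/g"
}
```

Usage: `expand_template ./dummydisk hdisk9` prints the CuDv, CuAt, and CuDvDr stanzas for hdisk9 without touching the ODM.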